Use cases for graph databases

What are Graph Databases?

A graph database stores information as relationships between entities, and represents this data using nodes and edges, instead of using rows and columns in tables. For example, a database of customers, orders and products:

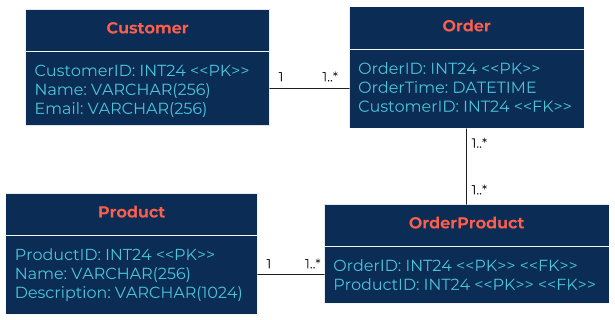

Figure 1. Customer and order data as relational database tables.

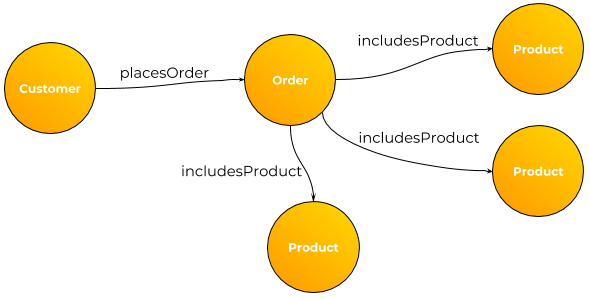

Figure 2. Customer and order data as a graph.

Figure 1 illustrates a typical relational database with four linked tables representing the relationship between customers who make orders, which are made up of products.

In Figure 2 the data is modelled in a graph, with nodes for the core object types, and edges for relationships between them.
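As a rough illustration of the node-and-edge model, the customer, order and product data could be held in plain Python structures like this. It is a minimal sketch rather than a real graph database: the identifiers, property names and edge labels (`PLACED`, `CONTAINS`) are assumptions made for the example, not taken from the figures.

```python
# Minimal in-memory graph: nodes keyed by id, edges as (source, label, target).
# All ids, labels and sample values are illustrative assumptions.
nodes = {
    "c1": {"type": "Customer", "name": "Alice"},
    "o1": {"type": "Order", "date": "2020-01-15"},
    "p1": {"type": "Product", "name": "Keyboard"},
    "p2": {"type": "Product", "name": "Mouse"},
}
edges = [
    ("c1", "PLACED", "o1"),
    ("o1", "CONTAINS", "p1"),
    ("o1", "CONTAINS", "p2"),
]


def neighbours(node_id, label):
    """Return the ids reached from node_id by following edges with this label."""
    return [dst for src, lbl, dst in edges if src == node_id and lbl == label]


def products_bought(customer_id):
    """Walk Customer -PLACED-> Order -CONTAINS-> Product and collect names."""
    names = []
    for order_id in neighbours(customer_id, "PLACED"):
        for product_id in neighbours(order_id, "CONTAINS"):
            names.append(nodes[product_id]["name"])
    return names


print(products_bought("c1"))  # ['Keyboard', 'Mouse']
```

The question "what has this customer bought?" becomes a walk along two kinds of edge, rather than a join across several tables.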

Representing the data as a graph enables numerous benefits over relational databases:

Greater Data Complexity: In a graph database, complex interdependent relationships between nodes can be added and removed in an easy-to-understand way, whereas in a tabular database this becomes increasingly difficult as additional data sources and use cases are introduced. Similarly, in a “NoSQL” document database, join operations across documents are complex to optimise.

Constant Evolution of Data Model: In a graph, the data model can be modified continually without the costly schema changes that table-based data requires. For example, where a new property is required for a particular relationship, it can simply be added to the relevant edges, just as a new property of an entity can be added to the relevant nodes.

Simpler Relationship-based Querying: A graph database abstracts join complexity and provides a query language that makes it easier to ask relationship-based questions of data. By contrast, complex queries over linked tables can be both difficult to understand and hard to optimise.
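Two of these benefits can be sketched in a few lines of plain Python. The example below is only an illustration under assumed data: the `BOUGHT` label, the `channel` property and the customer names are hypothetical. It attaches a new property to a single edge without touching the others, then answers a relationship-based question ("who bought something this customer also bought?") by walking edges.

```python
# Each edge carries its own property dictionary, so a new attribute can be
# attached to one relationship without changing any of the others.
edges = [
    {"src": "alice", "label": "BOUGHT", "dst": "keyboard", "props": {}},
    {"src": "bob", "label": "BOUGHT", "dst": "keyboard", "props": {}},
    {"src": "bob", "label": "BOUGHT", "dst": "monitor", "props": {}},
    {"src": "carol", "label": "BOUGHT", "dst": "monitor", "props": {}},
]

# Model evolution: record a (hypothetical) sales channel on a single edge.
edges[0]["props"]["channel"] = "web"


def bought(customer):
    """Products reached from a customer along BOUGHT edges."""
    return {e["dst"] for e in edges if e["src"] == customer and e["label"] == "BOUGHT"}


def buyers(product):
    """Customers that reach a product along BOUGHT edges."""
    return {e["src"] for e in edges if e["dst"] == product and e["label"] == "BOUGHT"}


def also_bought_with(customer):
    """Customers who share at least one purchased product with `customer`."""
    others = set()
    for product in bought(customer):
        others |= buyers(product)
    others.discard(customer)
    return sorted(others)


print(also_bought_with("bob"))  # ['alice', 'carol']
```

A graph query language expresses the same two-hop pattern declaratively; the loop here only shows the shape of the traversal.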

Graph databases are therefore highly beneficial to specific use cases:

- Fraud Detection

- 360-Degree Customer Views

- Recommendation Engines

- Network/Operations Mapping

- AI Knowledge Graphs

- Social Networks

- Supply Chain Mapping

Graph Database Use Cases

Fraud Detection

Business events and customer data, such as new accounts, loan applications and credit card transactions, can be modelled in a graph in order to detect fraud. By looking for suspicious patterns in customer activity metadata and cross-referencing them with previously identified fraud, we can flag potential fraud that may be ongoing.

Specifically, we extract entities from a large database of business activities, make relationships between them, and model them in a graph. We can then use a technique called “Entity Link Analysis” to identify suspicious links between types of entities that may indicate fraudulent behaviour.

Typically, a fraud detection graph uses entities that describe people, such as names and dates of birth, as well as contextual entities such as IP addresses, device identifiers and access times. We can then analyse the links between these entities and label those that have previously been identified as fraudulent. When entities labelled as fraudulent start to intersect with those that are not, suspicions begin to arise. One example is multiple bank accounts being used on a single device that has previously been used to access fraudulent accounts.

Suspicious links can be flagged to be investigated by humans, and also marked as “high-risk” so that future hits can be double-checked.
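The link analysis described above can be sketched in plain Python. This is a simplified illustration, not a production fraud model: the account ids, entity names and the `suspicious_accounts` helper are hypothetical, and it only looks one hop away from accounts already identified as fraudulent.

```python
from collections import defaultdict

# Hypothetical sample data: each link joins an account to a contextual entity.
links = [
    ("acct-1", "device:D1"),
    ("acct-2", "device:D1"),
    ("acct-2", "ip:10.0.0.5"),
    ("acct-3", "ip:10.0.0.5"),
    ("acct-4", "device:D2"),
]
known_fraud = {"acct-1"}


def suspicious_accounts(links, known_fraud):
    """Map each unflagged account to the entities it shares with known fraud."""
    accounts_by_entity = defaultdict(set)
    for account, entity in links:
        accounts_by_entity[entity].add(account)

    shared = defaultdict(set)
    for entity, accounts in accounts_by_entity.items():
        if accounts & known_fraud:  # entity is touched by a known-fraud account
            for account in accounts - known_fraud:
                shared[account].add(entity)
    return dict(shared)


print(suspicious_accounts(links, known_fraud))  # {'acct-2': {'device:D1'}}
```

Here `acct-2` is flagged because it shares a device with a known-fraud account. `acct-3` shares an IP address with `acct-2` but is not flagged, because this sketch stops at one hop; following links further is a design choice that trades recall against false positives.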

In addition to manually checking results, automation can be used and continually improved through pattern analysis of identified frauds. By collecting the before and after graph patterns of analysed suspected fraud cases, we can generate inputs for a Machine Learning (ML) training set. As more data flows into the graph, we feed it into the ML model to assess whether the graph patterns might represent a potential fraud, and the activity is then either blocked or flagged for human investigation.

360-Degree Customer View

A compelling use of graph databases is in integrating a business’s data from across its estate of data silos. This generates a consolidated view of the overall landscape, and can be used to improve insight, for example by enabling a “360-degree customer view.”

It is typical for a business to have data about customers scattered across its estate and locked into platforms used by particular verticals of the company: sales in SalesForce, marketing in HubSpot, email tracking in MailChimp, website tracking in Mixpanel and so on. To create a 360-degree customer view, we stream data out of these services into a graph, using a common data model to provide integration.
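The idea of a common data model can be sketched as follows. The record layouts, field names and edge labels are assumptions for illustration and do not reflect the real export formats of any of these services; the point is only that records from different silos attach to the same customer node once they are mapped to a shared key (here, a normalised email address).

```python
# Hypothetical records as they might arrive from separate systems.
crm_records = [{"Email": "Alice@Example.com", "AccountOwner": "Sam"}]
marketing_records = [{"email_address": "alice@example.com", "campaign": "spring-sale"}]
web_events = [{"user_email": "alice@example.com", "page": "/pricing"}]

graph_nodes = {}     # node id -> properties
graph_edges = set()  # (customer id, relationship label, target id)


def customer_node(email):
    """Return the node id for a customer, creating the node on first sight."""
    node_id = "customer:" + email.strip().lower()
    graph_nodes.setdefault(node_id, {"type": "Customer"})
    return node_id


def link(email, label, target_id, target_type):
    """Attach a customer to another entity, creating the target if needed."""
    graph_nodes.setdefault(target_id, {"type": target_type})
    graph_edges.add((customer_node(email), label, target_id))


# Map each source's own field names onto the shared model.
for record in crm_records:
    link(record["Email"], "MANAGED_BY", "rep:" + record["AccountOwner"], "SalesRep")
for record in marketing_records:
    link(record["email_address"], "TARGETED_BY", "campaign:" + record["campaign"], "Campaign")
for record in web_events:
    link(record["user_email"], "VIEWED", "page:" + record["page"], "Page")


def customer_view(email):
    """Everything the graph knows about one customer, as (label, target) pairs."""
    node_id = customer_node(email)
    return sorted((label, target) for src, label, target in graph_edges if src == node_id)


print(customer_view("alice@example.com"))
```

In a streaming setup, the three loops would be replaced by consumers that apply the same mapping to each incoming event.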

Previously, integrating so much data into a single graph would have been difficult to provision and operate. However, the advent of horizontally scalable graph databases such as DataStax Enterprise Graph, and data streaming frameworks such as Apache Kafka, has provided a reference architecture that can scale up to even the largest enterprise.

Having a live graph of everything known about the customer enables rich, up-to-date queries on customers and trends. Uses include deep dives on particular demographics or sectors of customers, aggregations of behaviour based on marketing events, and so on.

Initially, data may be made available to marketers through rich visual query interfaces, with live views of customer clicks, interactions and purchases, for example during a promotional event. As more features are developed from customer data, machine learning models can be trained to predict the impact of planned marketing activity on customer engagement with the business.

Network Mapping

Infrastructure mapping and inventory is a natural fit for representation as a graph, in particular when mapping relationships between connected physical/virtual hardware and the services that they support. An enterprise will use CMDBs (configuration management databases) and/or service catalogs to keep an inventory of its systems. These are used to keep track of components, their purpose, software versions and the interdependencies between them.

A graph of the relationships between infrastructure components not only enables interactive visualisations of the network estate, but also allows network tracing algorithms to walk the graph. For example, algorithms for:

Dependency Management: Identify single points of failure and simulate the impact of their failure on services, to identify cascading failures before they happen.

Bottleneck Identification: Find weak links in network routing that could cause bottlenecks at times of high network utilisation.

Latency Evaluation: Estimate latency across paths in the network, and the impact on services accessed from various geographic regions.
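As an illustration of the first of these, the impact of a component failure can be estimated by walking dependency edges in reverse. The inventory below is hypothetical, and the sketch makes a deliberately pessimistic assumption: anything that depends on a failed component is treated as affected, with no allowance for redundancy.

```python
from collections import deque

# Hypothetical inventory: each key depends on every item in its list.
depends_on = {
    "web-shop": ["load-balancer"],
    "load-balancer": ["app-server-1", "app-server-2"],
    "app-server-1": ["database"],
    "app-server-2": ["database"],
    "reporting": ["database"],
    "database": [],
}


def impacted_by(failed):
    """Return every component that depends, directly or transitively, on `failed`."""
    # Invert the dependency edges so we can walk from a component to its dependents.
    dependents = {}
    for component, requirements in depends_on.items():
        for requirement in requirements:
            dependents.setdefault(requirement, []).append(component)

    # Breadth-first walk over the inverted edges.
    seen = set()
    queue = deque([failed])
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, []):
            if dependent not in seen:
                seen.add(dependent)
                queue.append(dependent)
    return sorted(seen)


print(impacted_by("database"))
# ['app-server-1', 'app-server-2', 'load-balancer', 'reporting', 'web-shop']
```

Running this for every component and ranking by the size of the result gives a crude list of candidate single points of failure. A fuller model would mark redundant dependencies so that losing one of two app servers does not count as an outage.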

As an infrastructure grows, it can be difficult to assess the total impact of a change on the whole network. Using a graph database, metrics can be run to estimate the impact of upgrades before they are installed, as well as to debug infrastructure issues in a live network. Smart algorithms can identify bottlenecks and recommend routing changes or upgrade paths.
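One way to estimate the effect of a planned change is to compare a path metric on the current graph with the same metric on a copy that includes the change. The sketch below uses Dijkstra's shortest-path algorithm with latency as the edge weight. The sites, latencies and the proposed direct link are invented for the example.

```python
import heapq


def lowest_latency(links, source, target):
    """Dijkstra's algorithm over undirected links given as {(a, b): latency_ms}."""
    adjacency = {}
    for (a, b), ms in links.items():
        adjacency.setdefault(a, []).append((b, ms))
        adjacency.setdefault(b, []).append((a, ms))

    best = {source: 0}
    heap = [(0, source)]
    while heap:
        cost, node = heapq.heappop(heap)
        if node == target:
            return cost
        if cost > best.get(node, float("inf")):
            continue  # stale heap entry; a cheaper route was already found
        for neighbour, ms in adjacency.get(node, []):
            new_cost = cost + ms
            if new_cost < best.get(neighbour, float("inf")):
                best[neighbour] = new_cost
                heapq.heappush(heap, (new_cost, neighbour))
    return None  # no route between source and target


# Hypothetical network: latencies in milliseconds.
current = {
    ("london", "frankfurt"): 15,
    ("frankfurt", "singapore"): 160,
    ("london", "new-york"): 70,
    ("new-york", "singapore"): 230,
}

# Planned upgrade: a hypothetical direct link, evaluated before it is installed.
planned = dict(current)
planned[("london", "singapore")] = 165

print(lowest_latency(current, "london", "singapore"))  # 175
print(lowest_latency(planned, "london", "singapore"))  # 165
```

The same comparison can be repeated for each pair of regions to see which services would benefit from the upgrade.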

At 6point6 we have in-depth experience delivering graph database solutions for our clients, and maintaining them using our DataOps methodology.

For more information please contact us.

Written by Dr. Daniel Alexander Smith, Lead Big Data Architect